The AI we deserve ⊗ The “AI is fake and sucks” debate ⊗ What would a world with abundant energy look like?

No.337 — The alchemy that powers the modern world ⊗ Building A Circular Future ⊗ Open Source AI year in review 2024

The AI we deserve

Evgeny Morozov wrote a “forum” for the Boston Review (his article is published alongside responses from the likes of Brian Eno, Audrey Tang, Terry Winograd, and Bruce Schneier). The central argument, or question, is “if we could turn back the clock and shield computer scientists from the corrosive influence of the Cold War, what kind of more democratic, public-spirited, and less militaristic technological agenda might have emerged?”

It’s a 30-minute read so there’s no way I can summarise the thing with any kind of acceptable breadth. He goes back through the history of AI, mentioning all the usual suspects, like Dartmouth in 1956, Cybernetics in Chile, etc. The intriguing angle is that Morozov critiques the foundational ideas of artificial intelligence, arguing that AI reflects the bureaucratic rationality of the “Efficiency Lobby” and is too goal-driven, rather than being a tool for enhancing human intelligence.

He references Hans Otto Storm’s use of the eolith, a stone whose form inspires its use by man. Although “modern archaeologists doubt that eoliths are the result of this kind of human intervention,” it’s a useful metaphor for a kind of inventive flâneur who is not goal-driven but following their “imagination, curiosity, and originality.” “These may be of little interest to the Efficiency Lobby, but should they be dismissed by those who care about education, the arts, or a healthy democratic culture capable of exploring and debating alternative futures?”

Morozov does acknowledge that deep learning, thanks to its hallucinations and to being “powered by data and statistics,” does allow for “more heterogeneous and open-ended uses.” Yet, as we’ve seen in previous issues, that open-endedness is usually treated as a bug, not a feature. He ponders what could have been, concluding that “had the early AI community paid any attention to John Dewey and his work on ‘embodied intelligence,’ many false leads might have been avoided. One can only wonder what kind of AI—and AI critique—we could have had if its critics had looked to him rather than to Heidegger. But perhaps it’s not too late to still pursue that alternative path.”

In such moments of boredom, he argued, we disengage from the urgency of goals, experiencing the world in a more open-ended way that hints at a broader, fluid, contextual form of intelligence—one that involves not just the efficient achievement of tasks but a deeper interaction with our environment, guiding us toward meaning and purpose in ways that are hard to formalize. […]

Probably influenced by his connections to the pragmatists, Veblen discovered a different force at work: what he called “idle curiosity,” a kind of purposeless purpose that drove scientific discovery. This tension between directed and undirected thought would become crucial to Storm’s own theoretical innovations. […]

This longing for the heterogeneous over the rigid is not something people or societies are expected to outgrow as they develop. Instead, it’s a fundamental part of human experience that endures even in modernity. In fact, this striving might inform the very spirit—playful, idiosyncratic, vernacular, beyond the rigid plans and one-size-fits-all solutions—that some associate with postmodernity. […]

We might call it, in contrast, ecological reason—a view of intelligence that stresses both indeterminacy and the interactive relationship between ourselves and our environments. […]

There’s immense value in demonstrating—through real-world prototypes and institutional reforms—that untethering these tools from their market-driven development model is not only possible but beneficial for democracy, humanity, and the planet.

Weighing in on the “AI is fake and sucks” debate

I was going to jump over the debate around Casey Newton’s recent article, The phony comforts of AI skepticism. Then I saw that Dave Karpf wrote about it (this one), and so did Gary Marcus, so I paid a bit of attention. Karpf starts by recapping the debate, and I almost dropped off again, but then he presents his own argument and not only did I finish reading, I decided it would go in the newsletter.

Here’s how Karpf frames Newton’s argument and the debate: “‘AI is fake and sucks’ vs ‘AI is real and dangerous.’ He then made the case for why the first camp is immune to evidence, and the second camp is basically right.” You can read any and all of the links above, but I wanted to share the exchange for these two bits, which I find true and useful for most debates around technology:

So the real question is whether we should offer more grace to the underestimating skeptics or the overestimating tech evangelists. […]

My instinct, and I suspect this is true for much of my intellectual camp, is to calibrate how much grace I offer to incorrect predictions based on how much power was behind them.

In other words, predictions, whether product pitches or critiques, are not just correct or incorrect. If Musk prevents public transport investment with his hyperloop fantasy, that’s a real problem. If Gary Marcus is wrong when he insists that “the techniques that produced GPT4 were limited, and there would be decreasing returns from further scale” (looks like he’s right), the consequences are far smaller.

This kind of debate is often framed around optimism or pessimism, but the burden of proof (or at least our level of skepticism) should be much higher for people who wield massive power, because the consequences of their being wrong are much greater.

The return on investment doesn’t come from $20/month subscription fees. It comes from disrupting large, existing industries like health care, law, higher education, and military defense. That’s the ambition. So, before we fire all the radiologists, we should probably ask some skeptical questions about whether GPT5/OpenAI 03/Claude 7/Grok 2.0 will really be able to live up to the marketing promises. […]

And, for the companies and executives with multi-billion dollar resources and ambitions — the “tech barons,” broadly speaking, I think we should pay very close attention the promises they make about a glorious, inevitable technological future that never quite arrive […]

And the tech billionaires who have bet their companies futures on these products keep insisting that we not fixate on the shit futures they are in the process of manufacturing, because instead we ought to marvel at their latest product demo while imagining the endless possibilities.

What would a world with abundant energy look like?

I’ve linked to articles and interviews with Deb Chachra this year, and to her book How Infrastructure Works. Yet I’m also sharing this interview for two reasons. One, you should know about Deb’s work and you might not have picked up the book, so read this ;). Two, it’s focused on her “abundant energy” angle, which I have been mildly obsessed with. Not in the sense of researching left and right on the topic, but rather in how often “proof” pops up: cases where newly plentiful and cheap renewable solar or wind energy makes previously impossible things possible. As I’ve mentioned before, some people I also respect are on the “other side” and don’t believe in the abundant world angle (beyond the Jevons paradox). Still trying to figure this out.

The effect of having the ‘I need to keep my head down and stop bad things from happening’ versus the ‘head up, looking forward, using the privilege we have to try to build a world that’s better’ is a very different position. […]

Many places are never going to pass through the fossil fuel stage. They’re going to leapfrog the fossil fuel stage and go straight into harvesting the renewable energy that’s available in their local environment and doing that in a decentralized way. […]

What I tell my students is that it’s not their job to stop climate change or to stop these bad things from happening. It’s to build this world of abundant, equitable, resilient, sustainable energy and agency for everyone.

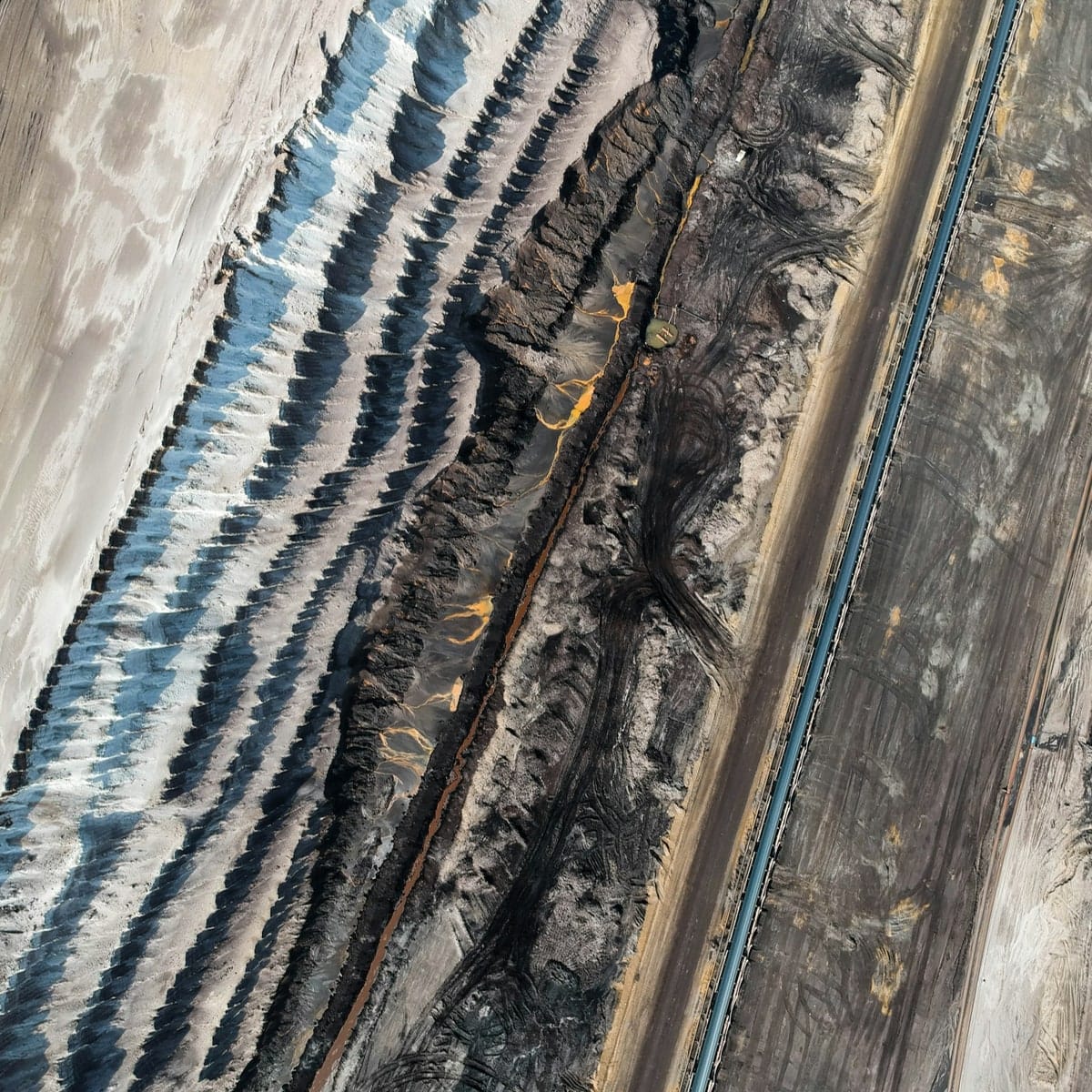

§ The alchemy that powers the modern world. “There are no solutions, no technologies, no social or economic developments that bring only benefits … every development, however positive, also has some kind of downside. There are always winners and losers. The shift to renewable energy will ultimately benefit most people, but, in the process, it will impose a steep price on some people.”

Let’s work together

Hi, I’m Patrick, the curator and writer of Sentiers. I notice what’s useful in our complex world and report back. I call this practice a futures thinking observatory. This newsletter is only part of what I find and document. If you want a new and broader perspective on your field and its surroundings, I can assemble custom briefings, reports, and internal or public newsletters, and work as a thought partner for leaders and their teams. Contact me to learn more.

Futures, Fictions & Fabulations

- Note: I’m playing it a bit fast and loose with the theme of the section this week.

- Building A Circular Future. “Building on the continuous conversations within the network, the publication Building a circular future: Insights from interdisciplinary research stresses the urgency of systemic transformation in the built environment. It advocates for a holistic approach, considering climate, society, nature, and the global economy.”

- Niantic Agents. “Agents is a short film that suggests a fresh, intuitive mental model for spatial computing. It imagines that all of our apps and button based interfaces have evolved into animate spirits, from personal assistants, to dating agents and local guides that haunt real world locations.” (Via the NFL Discord.)

- Missives from the Future (Tense), a HOLO dossier led by Claire L. Evans. I linked previously to this other dossier but while you’re there, also have a look at the Future Festivals Field Guide.

Algorithms, Automations & Augmentations

- Open Source AI year in review 2024. An advent calendar of AI stats by the team at Hugging Face, starting with pretty good predictions by the CEO.

- Dissertation: Cyborg Psychology: The Art & Science of Designing Human-AI Systems that Support Human Flourishing. “This dissertation introduces ‘Cyborg Psychology,’ an interdisciplinary, human-centered approach to understanding how AI systems influence human psychological processes.”

- Can’t say I’ve spent any time on this yet but: OpenAI’s video-making tool Sora takes photorealistic but fake video mainstream ◎ Video is AI’s new frontier – and it is so persuasive, we should all be worried ◎ It sure looks like OpenAI trained Sora on game content — and legal experts say that could be a problem. Surprise, surprise. (Not.)

Built, Biosphere & Breakthroughs

- Bitcoin miner buys Texas wind farm to take it off power grid. “According to MARA, the company will take the wind farm off the energy grid and, instead, use what it produces to power its Bitcoin mining operation in its North Texas location.” Because of course.

- Spain introduces paid climate leave after deadly floods. “Several companies came under fire after the 29 October catastrophe for ordering employees to keep working despite a red alert issued by the national weather agency. … The new measure aims to ‘regulate in accordance with the climate emergency’ so that ‘no worker must run risks.’”

- Small reductions to meat production in wealthier countries may help fight climate change. “Small cutbacks in higher-income countries—approximately 13% of total production—would reduce the amount of land needed for cattle grazing, the researchers note, allowing forests to naturally regrow on current pastureland.”

Asides

- Radical Talismans. “Radical Talismans are precious objects for precious information. Each talisman contains a programmable NFC tag that can store information, and be scanned by a phone. Community members can carry these small stones with them, and use them to share sensitive information with one another.” All different things, but I found the use of the word “talisman” interesting alongside recent mentions like Knowledge Objects as Gravitational Talismans and Amulets Against the Spirits of the Age.

- Nokia Design Archive: online treasure trove of tech history. “Aalto University launches the Nokia Design Archive, an online repository that charts the pioneering history of Finland’s legendary mobile phone manufacturer.” Back when phones weren’t just glass rectangles.

- Notre-Dame Reopens in Paris After a Fire. It’s Astonishing. (Long scroll at The New York Times). On NOVA, Rebuilding Notre Dame. (Both via The Dice — 028.)